Key Takeaways:

- AI models now shape brand perception for millions of users who ask ChatGPT, Gemini, and Perplexity for recommendations

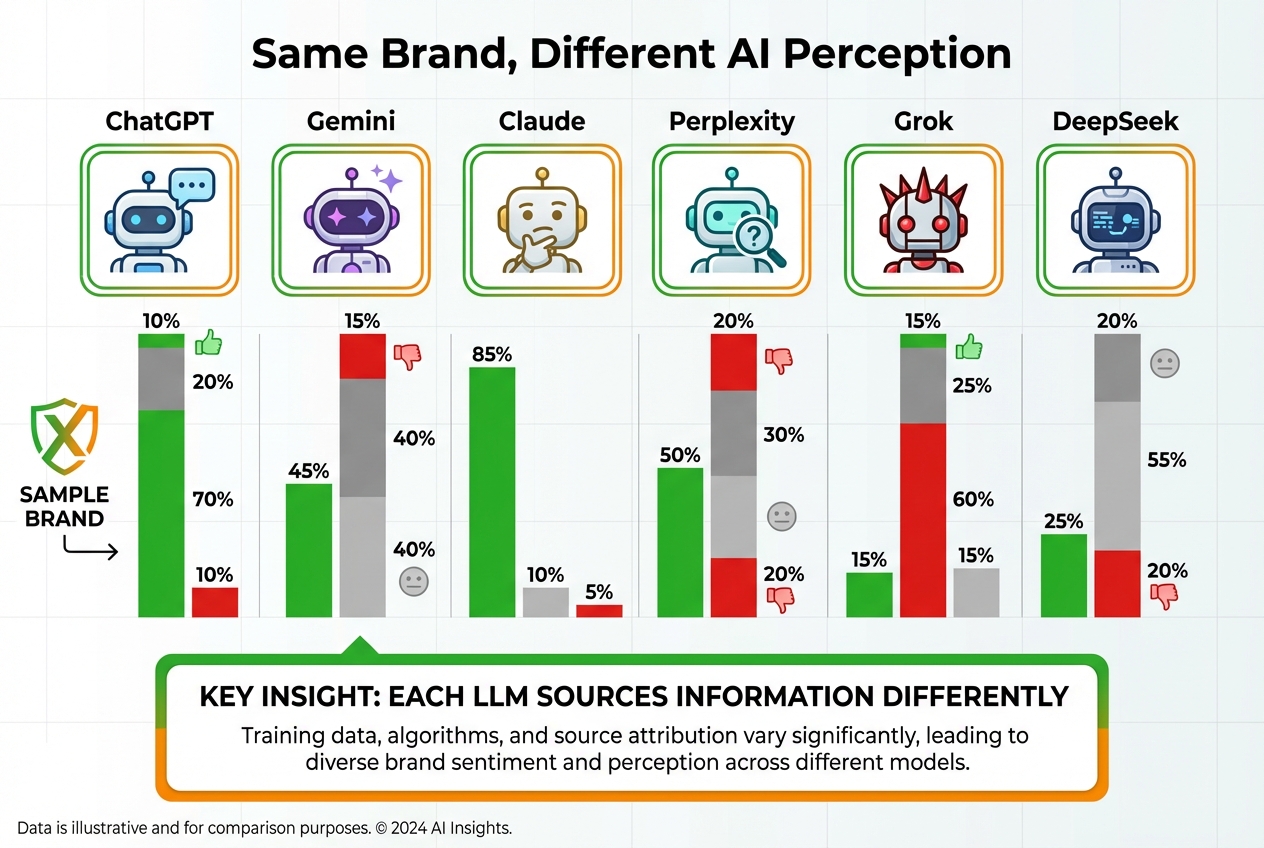

- Each LLM can perceive your brand differently based on its training data and source preferences

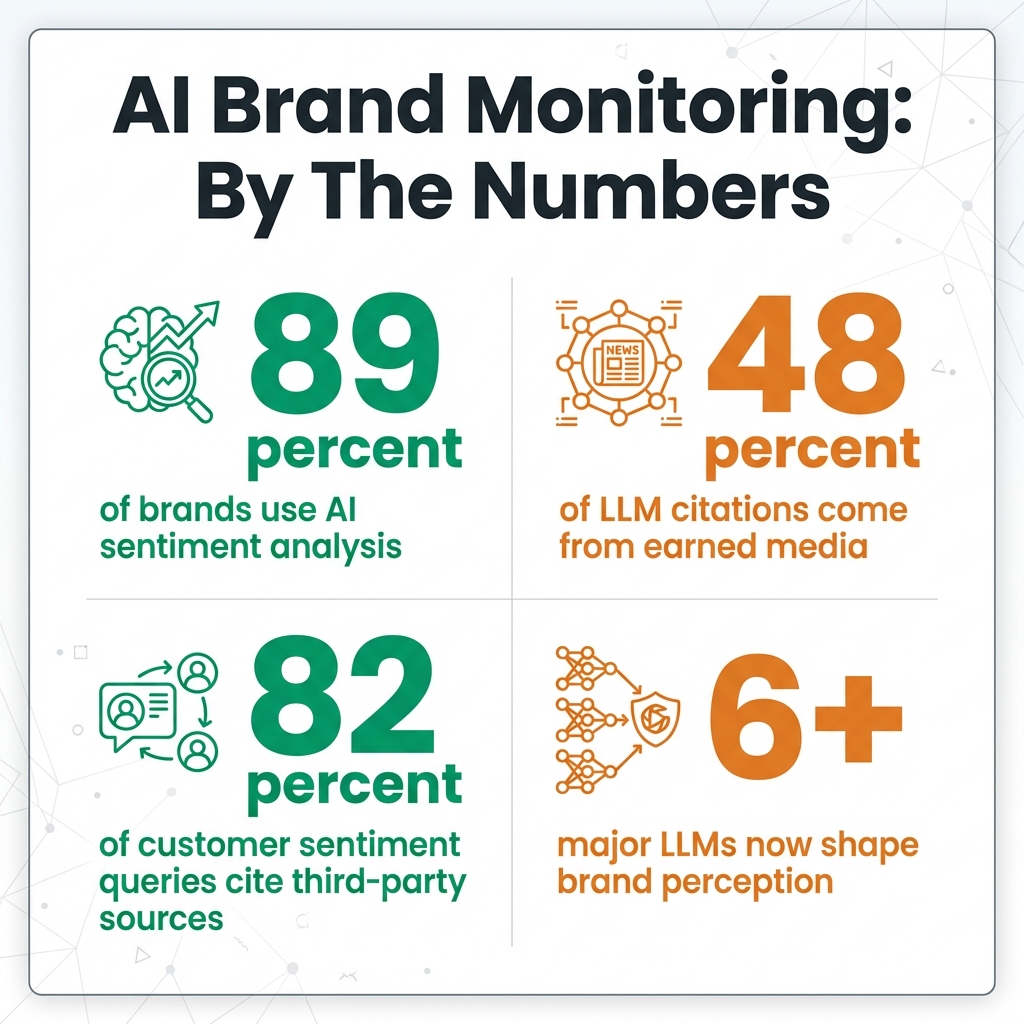

- For customer opinion queries, 82% of AI citations point to third-party sources rather than your own content

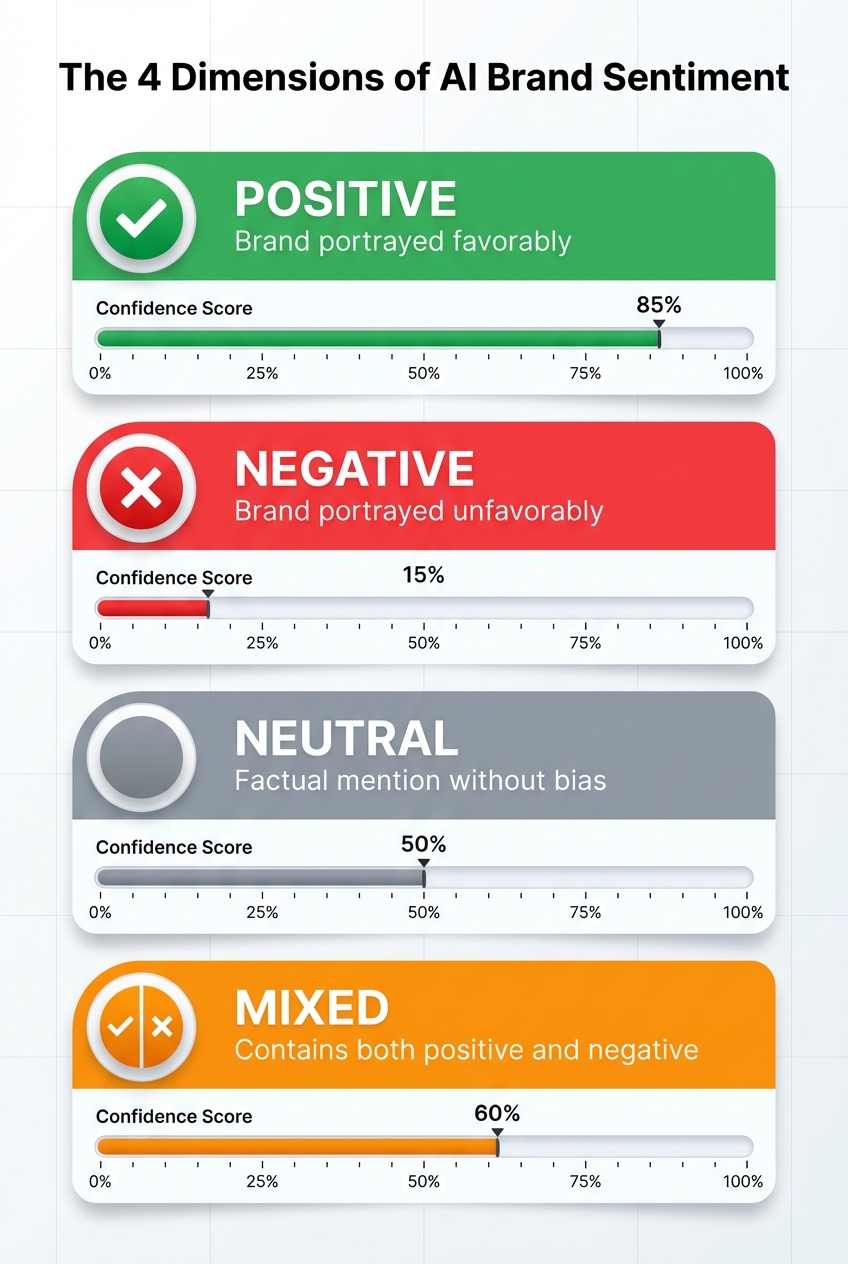

- Four-dimensional sentiment scoring (positive, negative, neutral, mixed) provides nuanced brand perception insights


Your brand’s reputation used to live in Google search results, social media mentions, and customer reviews. That world still exists. But a new arena has emerged where brand perception forms in real-time, shaping purchasing decisions before users ever visit your website.

That arena is AI-generated answers.

When a potential customer asks ChatGPT “What’s the best CRM for small businesses?” or prompts Perplexity with “Which marketing agency should I hire in Austin?”, they receive an AI-generated response that either mentions your brand favorably, mentions it critically, or doesn’t mention it at all.

This is AI brand sentiment: how large language models perceive, describe, and recommend your brand when users ask questions.

What Is AI Brand Sentiment?

AI brand sentiment refers to the tone, context, and framing that large language models (LLMs) apply when discussing your brand in their responses. Unlike traditional sentiment analysis, which monitors social media posts or customer reviews, AI brand sentiment measures how AI systems themselves characterize your business.

Think of it as your brand’s reputation inside the AI’s “mind.” When someone asks an LLM about your industry, your competitors, or your specific company, the AI draws on its training data and retrieval sources to form a response. That response carries sentiment, whether explicit (“Brand X is known for excellent customer service”) or implicit (“While Brand X offers competitive pricing, users often report…”).

AI brand sentiment has four core components:

- Mentions – Does your brand appear when users ask relevant questions?

- Sentiment – What tone does the AI apply when discussing your brand?

- Accuracy – Are the details about your brand correct and current?

- Context – How is your brand framed relative to alternatives?
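To make the Mentions component measurable, a minimal Python sketch can compute a mention rate: the share of collected AI responses that name your brand at least once. It assumes you have already gathered response text for a set of relevant prompts. The function name, the brand "Acme CRM", and the fictional competitors are hypothetical, and whole-word, case-insensitive matching is a simplifying assumption that will miss misspellings and paraphrases.

```python
import re


def mention_rate(responses: list[str], aliases: list[str]) -> float:
    """Return the fraction of responses that mention any brand alias.

    Matching is case-insensitive and on word boundaries, so "Acme" does not
    match "Acmeville". This is a simplifying assumption for illustration.
    """
    if not responses:
        return 0.0
    # One pattern that matches any alias as a whole word.
    pattern = re.compile(
        r"\b(?:" + "|".join(re.escape(a) for a in aliases) + r")\b",
        re.IGNORECASE,
    )
    hits = sum(1 for text in responses if pattern.search(text))
    return hits / len(responses)


# Hypothetical responses to "What's the best CRM for small businesses?"
responses = [
    "Popular options include Globex, Acme CRM, and Initech.",
    "For small teams, Globex and Initech are common picks.",
    "acme crm is often praised for its pricing.",
    "Globex is widely used, though it can be costly.",
]
print(mention_rate(responses, ["Acme CRM", "Acme"]))  # 0.5
```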

Why AI Brand Sentiment Matters Now

The shift from search engines to AI assistants represents a fundamental change in how consumers discover and evaluate brands. This isn’t a future prediction. It’s happening now.

AI is becoming the first touchpoint for brand discovery. When users ask ChatGPT for product recommendations, they often trust the response without clicking through to verify. The AI’s characterization of your brand becomes the user’s first impression.

LLMs aggregate and interpret your entire digital footprint. Mentions of your brand across the web, from news articles to Reddit threads to review sites, can feed into how AI models perceive you. A negative review from 2019 might still influence how Claude describes your company in 2026.

Your competitors are already being discussed. Even if users don’t ask about you specifically, AI responses to industry questions often name-drop brands. If your competitors appear in favorable contexts while you’re absent or criticized, you’re losing share of voice in AI-mediated conversations.

How LLMs Form Opinions About Your Brand

Understanding how AI models develop their perspective on your brand is essential for improving it. LLMs don’t have opinions in the human sense, but they do have biases built into their training data and retrieval patterns.

Training Data: The Foundation Layer

Every LLM is trained on a massive corpus of text from the internet. This includes:

- News articles and press releases

- Blog posts and industry publications

- Social media discussions (Reddit is heavily represented)

- Review sites and forums

- Wikipedia and knowledge bases

- Academic and technical documentation

Retrieval-Augmented Generation: Real-Time Context

Retrieval-enabled assistants such as Perplexity and ChatGPT with browsing don’t rely solely on training data. They retrieve current information from the web when answering questions. This is both an opportunity and a risk:

- Opportunity: Recent positive coverage can improve sentiment quickly

- Risk: A single viral negative article can immediately affect how AI discusses your brand

Source Preferences: Where AI Gets Its Information

Not all sources carry equal weight. Research analyzing over 23,000 AI citations reveals distinct patterns:

| Source Type | Share of Citations | Most Cited For |
|---|---|---|
| Earned Media | 48% | Customer opinion queries |
| Commercial Content | 30% | Feature/pricing queries |
| Owned Brand Content | 23% | Direct brand queries |
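If you log the URLs that AI answers cite, you can produce a similar breakdown for your own prompts. A minimal Python sketch, assuming a hand-maintained mapping from domain to source type; the domains and category assignments are hypothetical, and unmapped domains are counted as "Unclassified" rather than guessed.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical mapping from cited domain to source type.
SOURCE_TYPES = {
    "acme.example": "Owned Brand Content",
    "news.example": "Earned Media",
    "reviews.example": "Earned Media",
    "compare.example": "Commercial Content",
}


def citation_shares(cited_urls: list[str]) -> dict[str, float]:
    """Return each source type's share of all citations (0.0 to 1.0)."""
    counts = Counter()
    for url in cited_urls:
        host = (urlparse(url).hostname or "").removeprefix("www.")
        counts[SOURCE_TYPES.get(host, "Unclassified")] += 1
    total = sum(counts.values())
    return {kind: n / total for kind, n in counts.items()} if total else {}


urls = [
    "https://news.example/2025/acme-review",
    "https://www.reviews.example/acme",
    "https://compare.example/best-crm",
    "https://acme.example/pricing",
]
print(citation_shares(urls))
# {'Earned Media': 0.5, 'Commercial Content': 0.25, 'Owned Brand Content': 0.25}
```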

The Four Dimensions of AI Brand Sentiment

Binary sentiment analysis (positive vs. negative) fails to capture the nuance of how AI actually discusses brands. A more sophisticated approach uses four-dimensional confidence scoring, which rates each response across the four categories below.

1. Positive Sentiment

The AI portrays your brand favorably. This includes:

- Direct praise (“known for excellent customer service”)

- Favorable comparisons (“offers better value than competitors”)

- Recommendations (“a top choice for small businesses”)

- Positive attribute associations (“reliable,” “innovative,” “trusted”)

2. Negative Sentiment

The AI portrays your brand unfavorably. This includes:

- Direct criticism (“struggles with customer support”)

- Unfavorable comparisons (“more expensive than alternatives with fewer features”)

- Warnings or caveats (“users report frequent issues with…”)

- Negative attribute associations (“outdated,” “overpriced,” “difficult”)

3. Neutral Sentiment

The AI mentions your brand factually without clear bias. This includes:

- Objective descriptions (“founded in 2015, headquartered in Austin”)

- Feature listings without value judgments

- Inclusion in lists without comparative commentary

- Statistical mentions (“serves over 10,000 customers”)

4. Mixed Sentiment

The AI expresses both positive and negative sentiment in the same response. This is more common than pure positive or negative:

- “Brand X offers competitive pricing but has a steep learning curve”

- “While known for innovation, customers report inconsistent support”

- “Strong feature set, though the interface feels dated”
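One way to represent four-dimensional confidence scoring is to keep a confidence for each category per response, normalized so the four sum to 1, and treat the highest as the dominant label. This Python sketch assumes a classifier (not shown) has already produced raw non-negative scores; the class, field names, and sample values are hypothetical and do not reflect any particular tool's format.

```python
from dataclasses import dataclass

CATEGORIES = ("positive", "negative", "neutral", "mixed")


@dataclass(frozen=True)
class SentimentScores:
    positive: float
    negative: float
    neutral: float
    mixed: float

    @classmethod
    def from_raw(cls, raw: dict[str, float]) -> "SentimentScores":
        """Normalize raw non-negative scores so the four confidences sum to 1."""
        total = sum(raw.get(c, 0.0) for c in CATEGORIES)
        if total <= 0:
            raise ValueError("at least one score must be positive")
        return cls(**{c: raw.get(c, 0.0) / total for c in CATEGORIES})

    def label(self) -> str:
        """Return the category with the highest confidence."""
        return max(CATEGORIES, key=lambda c: getattr(self, c))


# "Brand X offers competitive pricing but has a steep learning curve"
scores = SentimentScores.from_raw(
    {"positive": 0.3, "negative": 0.2, "neutral": 0.1, "mixed": 0.6}
)
print(scores.label())  # mixed
print(round(scores.mixed, 2))  # 0.5
```

Keeping all four confidences, rather than only the winning label, preserves how close a response was to another category.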

Monitoring Sentiment Across Multiple LLMs

Here’s what makes AI brand sentiment particularly challenging: different LLMs can perceive the same brand completely differently. ChatGPT might describe your company favorably based on a strong Wikipedia presence and positive news coverage. Claude might express skepticism based on Reddit discussions in its training data. Perplexity, with its real-time web retrieval, might surface a recent negative review that other models miss entirely.

Why LLM-Specific Monitoring Matters

Each major AI model has:

- Different training data cutoffs – What each model “knows” varies

- Different source preferences – Some weight news heavily; others favor forums

- Different retrieval capabilities – Real-time vs. static knowledge

- Different response styles – Some are more cautious; others more assertive

| LLM | Key Characteristics | Monitoring Priority |
|---|---|---|
| ChatGPT | Largest user base, conversational tone | Critical |
| Perplexity | Real-time web retrieval, citation-heavy | High |
| Gemini | Google integration, commercial context | High |
| Claude | Thoughtful, nuanced responses | Medium-High |
| Grok | X/Twitter integration, current events focus | Medium |
| DeepSeek | Growing market share, technical audience | Medium |
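In practice, LLM-specific monitoring starts with sending the same prompt set to each provider and storing the raw answers for later scoring. This Python sketch keeps provider access abstract: `AskFn` is a hypothetical callable you would back with each vendor's own SDK, and the stand-in providers below only echo the prompt.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical: any function that sends one prompt to one provider and
# returns the answer text. Each vendor's own SDK would sit behind it.
AskFn = Callable[[str], str]


@dataclass
class Observation:
    provider: str
    prompt: str
    response: Optional[str]  # None if the call failed


def collect(providers: dict[str, AskFn], prompts: list[str]) -> list[Observation]:
    """Send every prompt to every provider and keep the raw responses."""
    observations: list[Observation] = []
    for name, ask in providers.items():
        for prompt in prompts:
            try:
                answer: Optional[str] = ask(prompt)
            except Exception:
                # Record the gap rather than abort the whole monitoring run.
                answer = None
            observations.append(Observation(name, prompt, answer))
    return observations


# Stand-in providers for illustration; real ones would call each vendor's API.
fake_providers: dict[str, AskFn] = {
    "ChatGPT": lambda p: f"(ChatGPT answer to: {p})",
    "Claude": lambda p: f"(Claude answer to: {p})",
}
results = collect(fake_providers, ["What's the best CRM for small businesses?"])
print(len(results))  # 2
```

Using an identical prompt set for every provider is what makes the later per-provider comparisons meaningful.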

Sentiment Variance by Provider

Tracking sentiment by provider reveals which AI models favor your brand and which don’t. This isn’t about “fixing” an AI’s opinion. It’s about understanding where your brand’s digital footprint creates favorable or unfavorable impressions.

Example insight: If ChatGPT consistently gives your brand an average positive score of 0.72 while Claude gives 0.45, investigate which sources each model emphasizes. ChatGPT might weight your strong press coverage; Claude might surface Reddit criticism more heavily.
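The provider comparison in the example insight can be computed by grouping per-response positive scores by provider. A minimal Python sketch, assuming each analyzed response already has a positive-confidence score between 0 and 1; the function names and sample rows are hypothetical, with values chosen to mirror the 0.72 vs. 0.45 example.

```python
from collections import defaultdict
from statistics import mean


def positive_by_provider(rows: list[tuple[str, float]]) -> dict[str, float]:
    """Average the positive-confidence score per provider.

    rows: (provider, positive_score) pairs, one per analyzed response.
    """
    grouped: dict[str, list[float]] = defaultdict(list)
    for provider, score in rows:
        grouped[provider].append(score)
    return {p: mean(scores) for p, scores in grouped.items()}


def widest_gap(averages: dict[str, float]) -> tuple[str, str, float]:
    """Return (highest-scoring provider, lowest-scoring provider, difference)."""
    top = max(averages, key=averages.get)
    bottom = min(averages, key=averages.get)
    return top, bottom, averages[top] - averages[bottom]


rows = [("ChatGPT", 0.70), ("ChatGPT", 0.74), ("Claude", 0.40), ("Claude", 0.50)]
avgs = positive_by_provider(rows)
top, bottom, gap = widest_gap(avgs)
print(top, bottom, round(gap, 2))  # ChatGPT Claude 0.27
```

A large gap is a prompt to compare the sources each provider cites, not a verdict on which model is "right."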

Strategies to Improve How AI Talks About You

Improving AI brand sentiment isn’t about gaming the system. It’s about ensuring your brand’s digital presence accurately reflects your value proposition. Here are actionable strategies:

1. Audit Your Earned Media Presence

Since earned media drives 48% of LLM citations (and 82% of citations for customer opinion queries), your third-party presence matters more than your own website content. Action items:

- Monitor and respond to reviews on major platforms (Trustpilot, G2, Capterra)

- Engage constructively in Reddit discussions about your industry

- Pursue press coverage in publications LLMs are likely to cite

- Address negative reviews with substantive responses, not defensive dismissals

2. Optimize Your Wikipedia Presence

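Wikipedia carries significant weight in LLM training data. If your company qualifies for a Wikipedia page:

- Ensure information is accurate and current (Wikipedia’s conflict-of-interest guideline strongly discourages people connected to a company from editing its article directly and recommends proposing changes on the article’s talk page)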

- Add citations to authoritative sources

- Avoid promotional language (Wikipedia editors will remove it)

- Keep the page updated with significant company developments

3. Create Authoritative, Citable Content

LLMs cite sources they perceive as authoritative. Content that earns citations:

- Original research with specific data points

- Industry reports with novel findings

- Expert commentary on trending topics

- Comprehensive guides that become go-to resources

4. Monitor Competitor Sentiment

Understanding how AI talks about your competitors reveals:

- What attributes AI associates with category leaders

- Gaps in competitor coverage you can fill

- Negative sentiment patterns you can avoid

- Positioning opportunities in AI-generated comparisons
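A simple way to compare your visibility with competitors is share of voice: each brand's portion of all brand mentions in a set of AI responses. This Python sketch is one possible definition, assuming a brand counts at most once per response; the brand names and responses are hypothetical.

```python
import re
from collections import Counter


def share_of_voice(responses: list[str], brands: list[str]) -> dict[str, float]:
    """Each brand's share of all brand mentions across the responses.

    A brand counts once per response it appears in (whole-word,
    case-insensitive match), so long answers don't dominate.
    """
    counts = Counter()
    for text in responses:
        for brand in brands:
            if re.search(rf"\b{re.escape(brand)}\b", text, re.IGNORECASE):
                counts[brand] += 1
    total = sum(counts.values())
    return {b: counts[b] / total if total else 0.0 for b in brands}


responses = [
    "Acme CRM and Globex are both solid.",
    "Globex leads on features; Initech is cheaper.",
    "Globex is a common pick.",
]
print(share_of_voice(responses, ["Acme CRM", "Globex", "Initech"]))
# {'Acme CRM': 0.2, 'Globex': 0.6, 'Initech': 0.2}
```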

5. Address Accuracy Issues Proactively

AI models sometimes present outdated or incorrect information about brands. When you identify inaccuracies:

- Update your official sources with correct information

- Create content that directly addresses common misconceptions

- For retrieval-based models (Perplexity), ensure your website contains accurate, easily parseable information
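One widely used way to present core company facts in an easily parseable form is schema.org `Organization` markup embedded as JSON-LD. The sketch below generates such a snippet in Python. Whether a given AI system reads this markup is an assumption, not something this article establishes, and the company details are placeholders echoing the earlier "founded in 2015, headquartered in Austin" example.

```python
import json


def organization_jsonld(name: str, url: str, founding_year: str,
                        city: str, region: str, profiles: list[str]) -> str:
    """Build a schema.org Organization JSON-LD snippet as a string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "foundingDate": founding_year,
        "address": {
            "@type": "PostalAddress",
            "addressLocality": city,
            "addressRegion": region,
        },
        # Official profiles that help tie the brand to one entity.
        "sameAs": profiles,
    }
    return json.dumps(data, indent=2)


snippet = organization_jsonld(
    "Acme CRM", "https://acme.example", "2015", "Austin", "TX",
    ["https://www.linkedin.com/company/acme-example"],
)
# Place the output inside <script type="application/ld+json"> on your site.
print(snippet)
```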

6. Build Sentiment Over Time

AI brand sentiment isn’t fixed. It evolves as:

- New content enters training data during model updates

- Retrieval-based models surface recent coverage

- Your brand’s digital footprint expands

Tracking sentiment over time reveals:

- Whether recent initiatives are improving perception

- Seasonal or event-driven sentiment fluctuations

- The impact of specific campaigns or press coverage
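Tracking sentiment over time usually means rolling individual scores up into regular periods. This Python sketch averages dated net sentiment scores into ISO calendar weeks; the function name and sample data are hypothetical, and weekly buckets are just one reasonable choice.

```python
from collections import defaultdict
from datetime import date
from statistics import mean


def weekly_average(points: list[tuple[date, float]]) -> dict[str, float]:
    """Average dated net sentiment scores into ISO weeks, e.g. '2025-W02'."""
    buckets: dict[str, list[float]] = defaultdict(list)
    for day, score in points:
        year, week, _ = day.isocalendar()
        buckets[f"{year}-W{week:02d}"].append(score)
    # Sorted keys give a chronological series for charting.
    return {wk: mean(vals) for wk, vals in sorted(buckets.items())}


points = [
    (date(2025, 1, 6), 0.25),
    (date(2025, 1, 8), 0.75),
    (date(2025, 1, 13), 0.25),
]
print(weekly_average(points))  # {'2025-W02': 0.5, '2025-W03': 0.25}
```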

Measuring and Reporting AI Brand Sentiment

Effective AI brand sentiment monitoring requires structured measurement and regular reporting. Here’s how to build a measurement framework:

Key Metrics to Track

1. Sentiment Score by Entity

Track separate scores for your brand and each competitor. A useful formula:

Net Sentiment Score = Average Positive - Average Negative

With positive and negative scores each ranging from 0 to 1, this produces a score from -1.0 (entirely negative) to +1.0 (entirely positive), with 0 being neutral.

2. Sentiment Distribution

Track the percentage of responses falling into each category:

- % Positive

- % Negative

- % Neutral

- % Mixed
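Both metrics are straightforward to compute once each response has been scored. A minimal Python sketch, assuming per-response positive and negative confidences between 0 and 1 for the net score, and one dominant label per response for the distribution; function names and sample values are hypothetical.

```python
from collections import Counter

CATEGORIES = ("positive", "negative", "neutral", "mixed")


def net_sentiment_score(positive: list[float], negative: list[float]) -> float:
    """Net Sentiment Score = average positive - average negative.

    Assumes each score is a confidence between 0 and 1, so the result lies
    between -1.0 and +1.0.
    """
    if not positive or len(positive) != len(negative):
        raise ValueError("need one positive and one negative score per response")
    return sum(positive) / len(positive) - sum(negative) / len(negative)


def sentiment_distribution(labels: list[str]) -> dict[str, float]:
    """Percentage of responses whose dominant label falls in each category."""
    total = len(labels)
    if total == 0:
        return {c: 0.0 for c in CATEGORIES}
    counts = Counter(labels)
    return {c: 100 * counts[c] / total for c in CATEGORIES}


print(round(net_sentiment_score([0.9, 0.6, 0.3], [0.0, 0.2, 0.4]), 2))  # 0.4
print(sentiment_distribution(["positive", "positive", "mixed", "negative"]))
# {'positive': 50.0, 'negative': 25.0, 'neutral': 0.0, 'mixed': 25.0}
```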

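Breaking the distribution out by provider shows where perception differs. Here the net score is the share of positive responses minus the share of negative responses (for ChatGPT, 0.68 - 0.12 = +0.56):

| Provider | Positive | Negative | Net Score |
|---|---|---|---|
| ChatGPT | 68% | 12% | +0.56 |
| Gemini | 62% | 18% | +0.44 |
| Claude | 55% | 22% | +0.33 |
| Perplexity | 71% | 8% | +0.63 |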

When sentiment shifts, check whether the change lines up with:

- Marketing campaigns

- Product launches

- Press coverage

- Competitor activity

Reporting Cadence

Weekly: Quick sentiment pulse check across all LLMs. Flag any significant drops.

Monthly: Detailed analysis including:

- Sentiment by provider

- Competitor comparison

- Trend analysis

- Notable response examples (positive and negative)

Quarterly: Strategic review including:

- Correlation with business metrics

- ROI of sentiment improvement initiatives

- Competitive positioning shifts

- Recommendations for next quarter
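The weekly "flag any significant drops" check can be as simple as comparing each provider's net score with the previous week's. In this Python sketch the 0.10 threshold is an arbitrary placeholder and the function name is hypothetical; last week's values reuse the provider net scores from the table above, and this week's values are invented for illustration.

```python
def flag_drops(previous: dict[str, float], current: dict[str, float],
               threshold: float = 0.10) -> list[str]:
    """List providers whose net score fell by more than `threshold`.

    The 0.10 default is an arbitrary placeholder; tune it to your own data.
    Providers missing from `previous` are skipped.
    """
    flagged = []
    for provider, score in current.items():
        before = previous.get(provider)
        if before is not None and before - score > threshold:
            flagged.append(f"{provider}: {before:+.2f} -> {score:+.2f}")
    return flagged


last_week = {"ChatGPT": 0.56, "Gemini": 0.44, "Claude": 0.33, "Perplexity": 0.63}
this_week = {"ChatGPT": 0.55, "Gemini": 0.29, "Claude": 0.35, "Perplexity": 0.60}
print(flag_drops(last_week, this_week))  # ['Gemini: +0.44 -> +0.29']
```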

Tools for AI Brand Sentiment Monitoring

Manual monitoring across multiple LLMs is impractical at scale. Specialized tools like Cairrot offer:

- Multi-LLM tracking across ChatGPT, Gemini, Claude, Perplexity, Grok, and DeepSeek

- Automated sentiment classification with four-dimensional confidence scoring

- Provider-specific breakdowns showing which AI models favor your brand

- Time-series analysis tracking sentiment evolution over days, weeks, and months

- Competitor benchmarking comparing your sentiment against industry peers

Summary

AI brand sentiment represents a new dimension of brand management that most companies are only beginning to address. The brands that monitor, measure, and actively manage how AI talks about them will maintain competitive advantage as AI-mediated discovery becomes the norm. Start with these steps:

- Audit your current AI brand sentiment across major LLMs (ChatGPT, Gemini, Claude, Perplexity)

- Identify sentiment gaps between AI perception and your actual brand value

- Prioritize earned media since LLMs heavily weight third-party sources

- Track sentiment trends weekly to catch issues early and measure improvement

- Compare against competitors to understand your relative positioning in AI responses

Author


Connor Kimball is an SEO and AEO expert specializing in organic growth strategies for SaaS, ecommerce, industrial, legal, and finance companies. He is the founder of Cairrot and still maintains an active roster of clients. He shares data studies, field observations, and useful guides for marketers looking to improve their visibility in AI search engines.